Ensure safe AI use by intercepting prompts that contain sensitive data before they're sent to third-party AI tools.

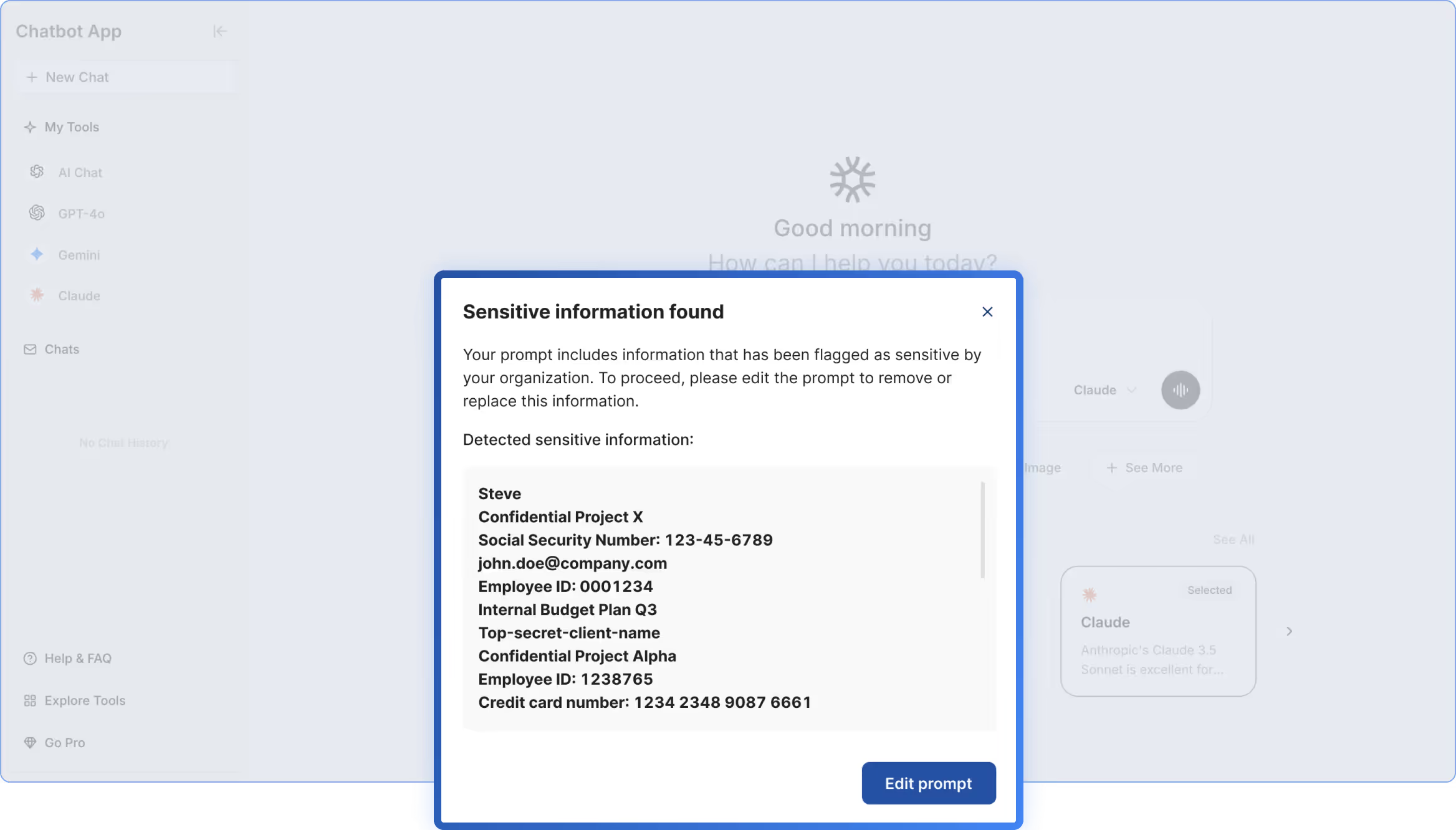

Ensure safe AI prompting by preventing users from entering classified data into GenAI chatbot prompts, based on custom keyword recognition or data type sets.

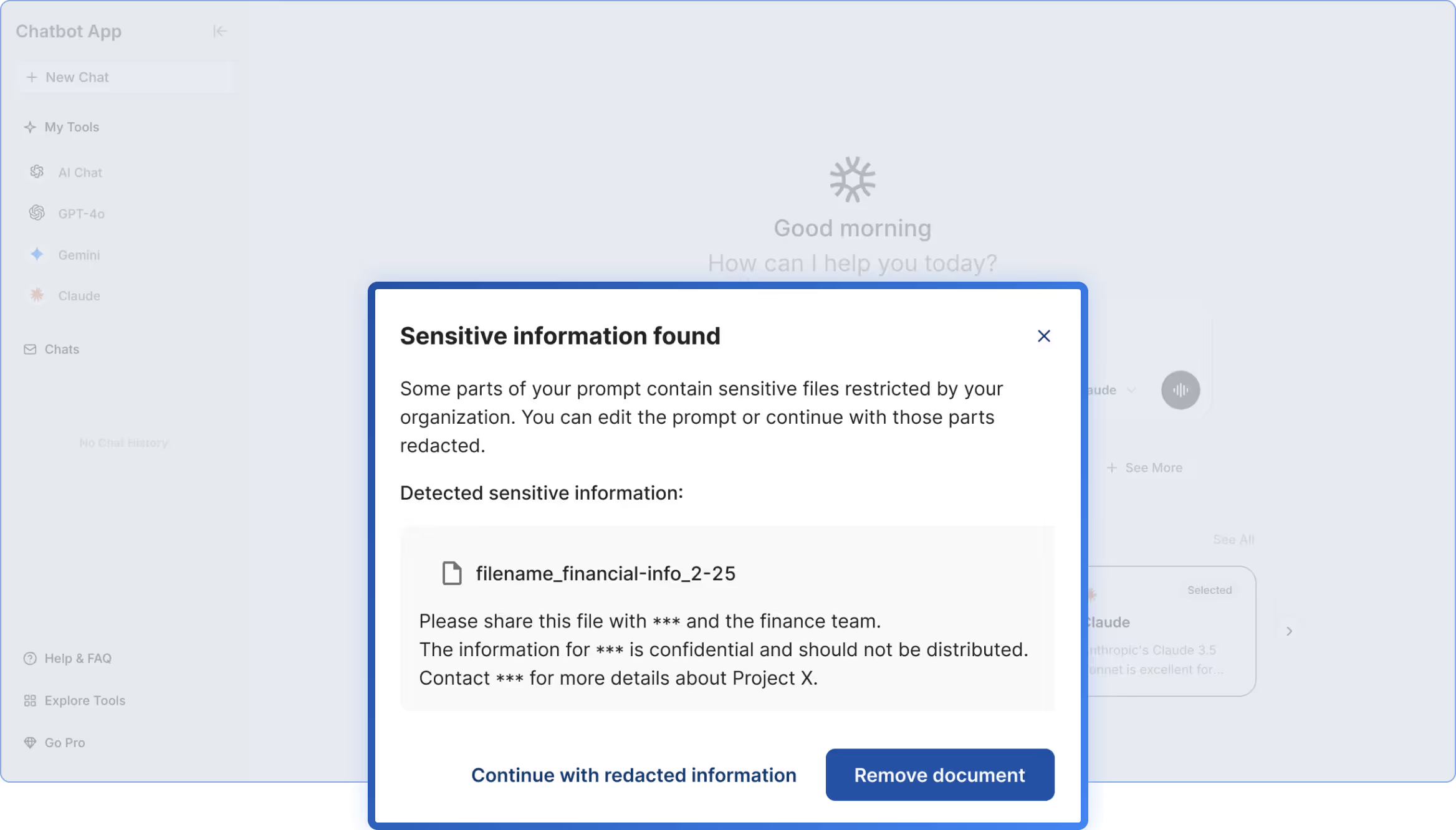

Match AI productivity with safety by blocking users from submitting AI prompts that include documents containing sensitive or classified data.

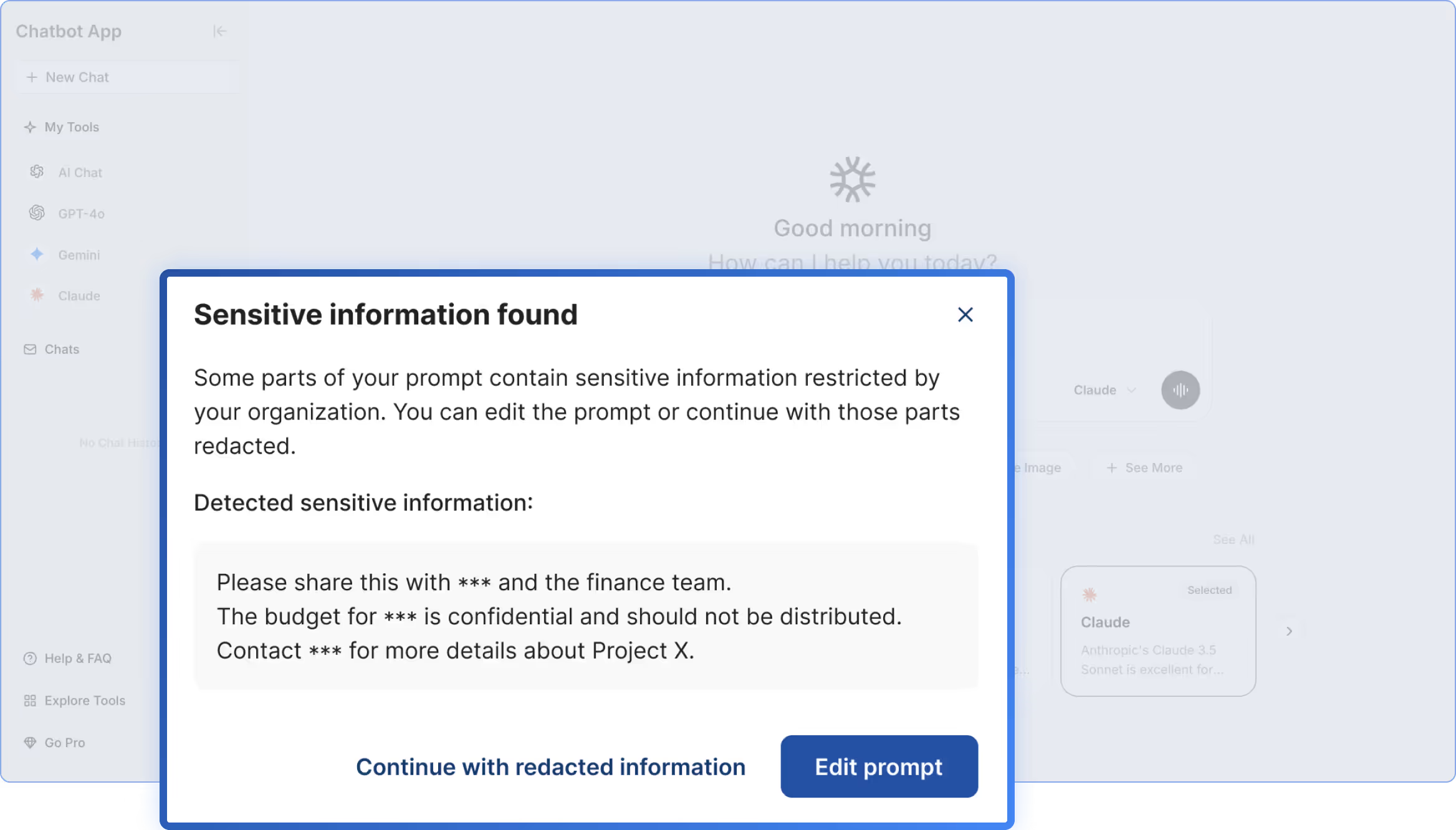

Embed customizable in-browser alerts that track activity, warn users, or restrict unsafe prompts, helping employees use GenAI tools responsibly and securely.